Google is introducing several updates to its AI services, including Video Overviews in NotebookLM and the ability to ask AI Mode questions about images and PDFs on the web. Here’s everything you need to know about the updates to AI Mode and NotebookLM.

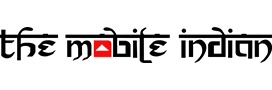

Video Overviews in NotebookLM

Rolling out now to all users in English, Video Overviews aim to make complex information easier to grasp through narrated slide presentations. The feature was first showcased at I/O earlier this year.

Positioned as a visual alternative to Audio Overviews, the feature uses an AI-generated host to walk users through custom slides enriched with visuals, diagrams, quotes, data points, and other elements pulled directly from their documents. This format is especially useful for explaining data-heavy content, illustrating processes, or breaking down abstract ideas.

Much like Audio Overviews, Video Overviews are highly customizable. Users can tailor the experience by specifying learning goals, areas of focus, and the target audience. Google says the tool adapts accordingly, whether you’re a beginner looking to decode scientific diagrams or an expert needing a focused recap on a specific topic. You can prompt it with requests ranging from “Help me understand the graphs” to “I’m familiar with X and Y; focus only on Z.”

Google says additional formats under the Video Overviews category are on the way, and support for more languages will roll out in the near future.

Aside from this, Google is giving NotebookLM’s Studio panel a major upgrade, introducing a cleaner design and the ability to create and store multiple outputs of the same type within a single notebook.

With the revamped Studio, users can now generate various outputs—Audio Overviews, Video Overviews, Mind Maps, and Reports—and manage them all in one place. Four distinct tiles at the top of the panel make it easy to start creating, while all saved content appears in a convenient list below.

This expanded flexibility enables new use cases:

- Public notebooks can feature Audio Overviews in different languages to reach a broader audience.

- Teams managing shared notebooks can create role-specific summaries to streamline communication.

- Students preparing for exams can generate chapter-wise Video Overviews or Mind Maps to better understand their study material.

To improve productivity, the Studio panel now supports multitasking. Users can, for instance, listen to an Audio Overview while reviewing a Mind Map or reading through a Report.

The redesigned Studio will roll out to all users over the coming weeks.

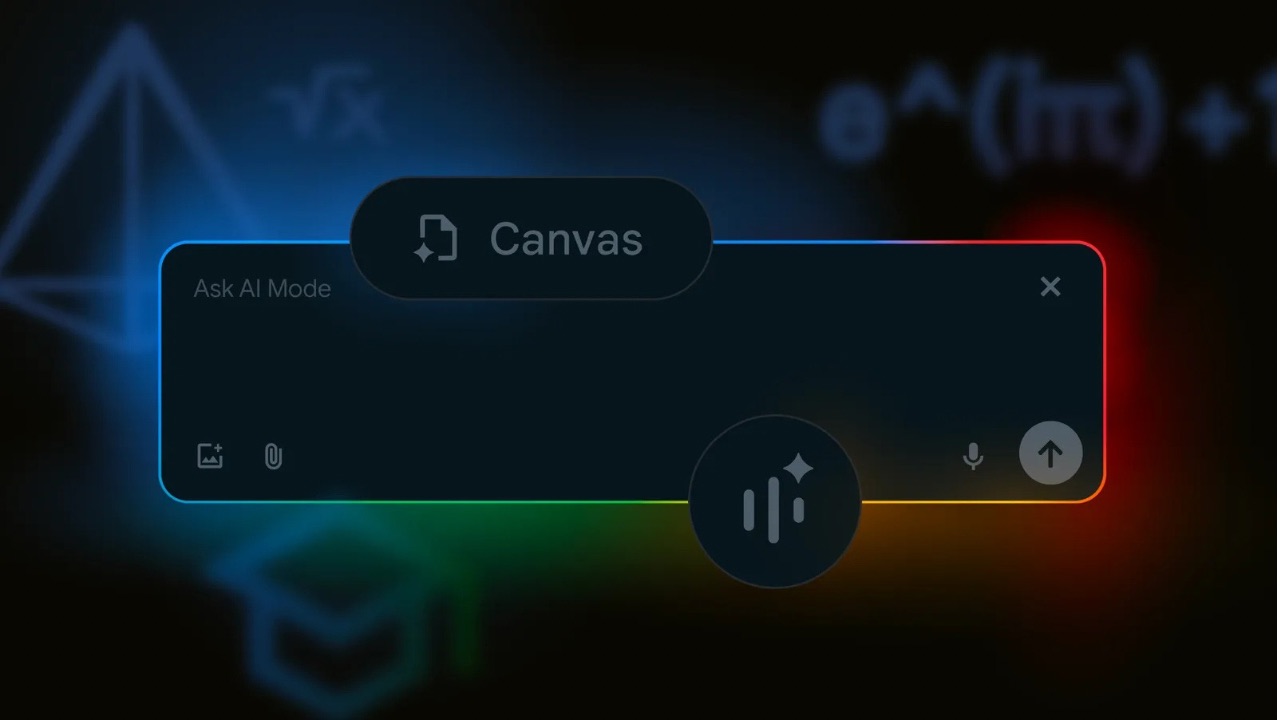

Canvas, Search Live with video, PDF uploads in AI Mode on Web

Google is bringing more power to AI Mode in Search, extending features beyond mobile devices to desktop browsers. Asking complex questions about images, previously possible only in the Google app on Android and iOS, now works directly from the desktop.

In the coming weeks, users will also be able to upload PDFs on desktop and ask detailed questions about their content. Whether it’s lecture slides from a psychology class or lengthy documents, AI Mode can analyze the file, pull relevant insights, and provide context-rich answers. The feature also includes links to helpful resources from the web, allowing users to explore further.

Currently available in English to users aged 18+ in the US and India, AI Mode blends document understanding with real-time web context for deeper, more informed responses. Google also confirmed that support for additional file types—such as those stored in Google Drive—is on the way in the coming months.

With Canvas, you can build plans and organize information over multiple sessions in a dynamic side panel that updates as you go. For example, if you want to create a study plan for an upcoming test, just ask AI Mode, then tap the “Create Canvas” button to get started. Soon, the new upload feature will also make it possible to customize your study guide (or whatever you need to create) with context from your files, like class notes or a course syllabus.

Furthermore, in the coming weeks, users enrolled in the AI Mode Labs experiment in the US will begin to see Canvas on desktop browsers. A “Create Canvas” option will appear when you ask for help creating or planning something.

Google is also introducing Search Live with video input, a new feature rolling out this week that brings advanced AI capabilities from Project Astra directly into AI Mode. This update allows users to interact with Google Search in real time using live video.

With Search Live, users can point their camera at something and ask questions on the spot—whether it’s a complex diagram, a product, or a real-world scene. The AI can see what you see, respond instantly, and explain tricky concepts as you go, while also providing helpful web links for deeper exploration.

The feature is also fully integrated with Google Lens. To try it out, open the Google app, tap on Lens, and select the Live icon. From there, you can point your camera at any scene or object and ask questions as they come to mind. Search uses AI Mode to process visual context—like different angles or moving objects—allowing for a dynamic, back-and-forth conversation.

Finally, Chrome users will soon see a new option in the address bar dropdown: “Ask Google about this page.” This feature offers a quick and convenient way to access Google Lens directly in Chrome.

By selecting the option, users can get helpful insights about the content of any webpage, powered by Lens and AI Mode.