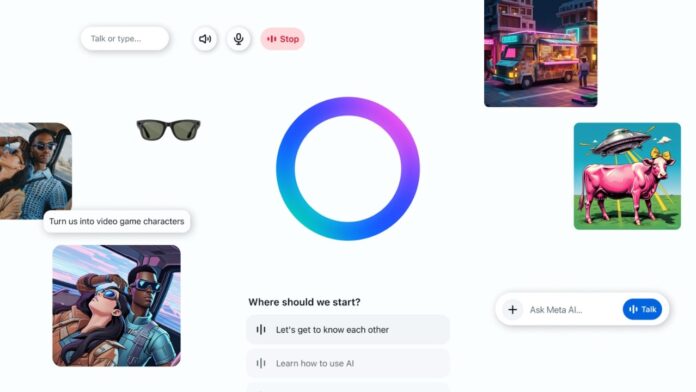

Meta has announced the launch of the Meta AI app, a standalone app that offers a personal AI assistant designed around voice conversations. This release is the first version, and the company says it will gather feedback to improve future versions.

“Meta AI is built to get to know you, so its answers are more helpful. It’s easy to talk to, so it’s more seamless and natural to interact with. It’s more social, so it can show you things from the people and places you care about. And you can use Meta AI’s voice features while multitasking and doing other things on your device, with a visible icon to let you know when the microphone is in use,” said Meta in a blog post.

Meta says it has improved its underlying model with Llama 4 to deliver responses that feel more personal, relevant, and conversational in tone. The app also integrates with other Meta AI features such as image generation and editing, which can now be done through a voice or text conversation with the assistant.

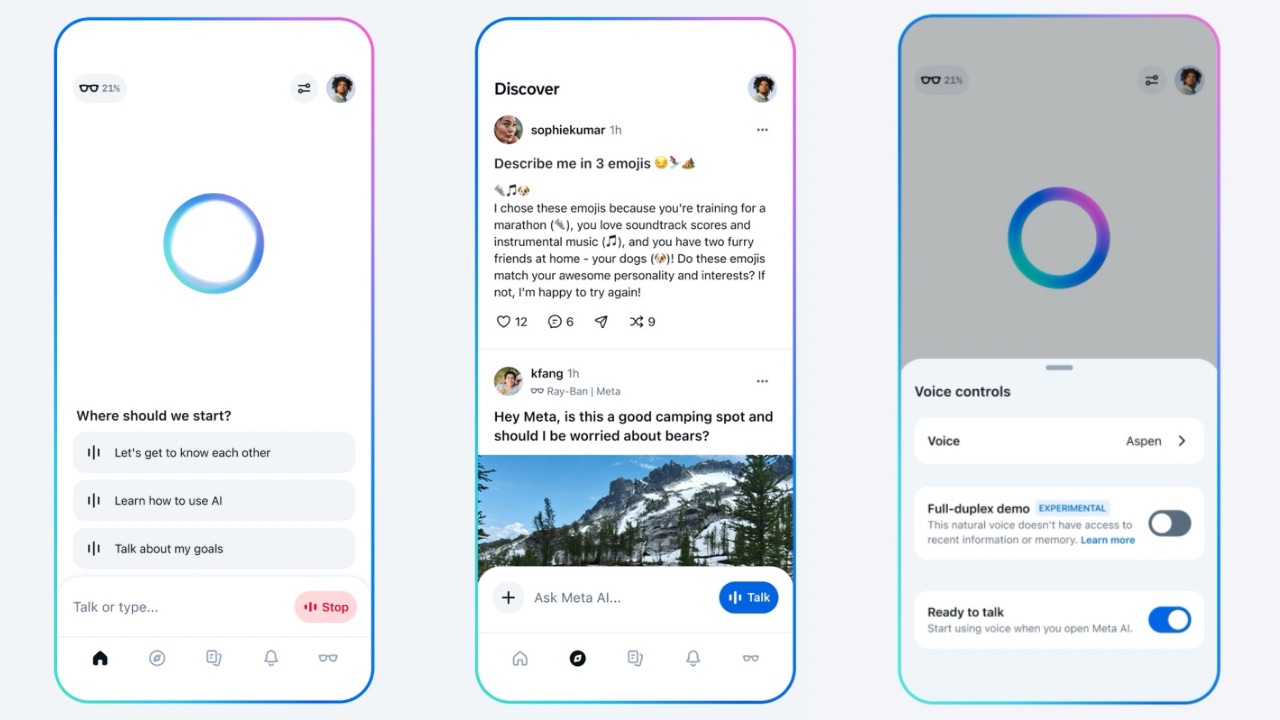

Meta has also included a voice demo built with full-duplex speech technology that you can toggle on and off to test. The technology is trained on conversational dialogue and is meant to deliver a more natural voice experience, with the AI generating voice directly instead of reading written responses aloud.

The demo doesn’t have access to the web or real-time information, though. Meta notes that users may encounter technical issues or inconsistencies, as the feature is being offered on an experimental basis.

Voice conversations, including the full-duplex demo, are available in the US, Canada, Australia, and New Zealand to start.

You can also tell Meta AI to remember certain things about you, and it can pick up important details based on context. The assistant also delivers more relevant answers by drawing on information you’ve already chosen to share on Meta products, such as your profile and the content you like or engage with.

Personalized responses are available today in the US and Canada. And if you’ve added your Facebook and Instagram accounts to the same Accounts Center, Meta AI can draw from both to provide an even stronger personalized experience for you.

The Meta AI app also includes a Discover feed, a place to share and explore how others are using AI. You can see the best prompts people are sharing, or remix them to make them your own. And as always, you’re in control: nothing is shared to your feed unless you choose to post it.

The company is also merging the new Meta AI app with the Meta View companion app for Ray-Ban Meta glasses, and in some countries you’ll be able to switch from interacting with Meta AI on your glasses to the app. You’ll be able to start a conversation on your glasses, then access it in your history tab from the app or web to pick up where you left off. You can also move a conversation between the app and the web in either direction, but you cannot start in the app or on the web and then pick up where you left off on your glasses.

Existing Meta View users can continue to manage their AI glasses from the Meta AI app. Once the app updates, all paired devices, settings, and media will automatically transfer to the new Devices tab.

Meta AI on the web is also getting an upgrade. It comes with voice interactions and the new Discover feed, just like you see in the app. The web interface has been optimized for larger screens and desktop workflows and includes an improved image generation experience, with more presets and new options for modifying style, mood, lighting and colors.

Meta is also testing a rich document editor in select countries, which you can use to generate documents full of text and images and then export them as PDFs. It is testing the ability to import documents for Meta AI to analyze and understand as well.